July 1, 2019

The perceptron is a fundamental building block of modern neural networks. The idea has existed since the late 1950s, but for a long time it was largely set aside because its usefulness seemed limited. That’s since changed in a big way.

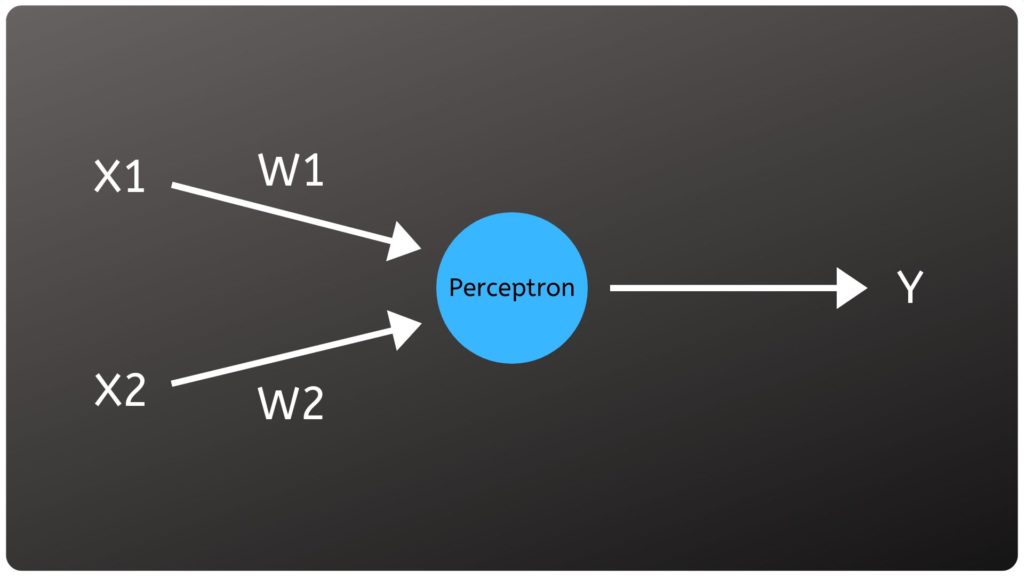

In its simplest form, a perceptron has two inputs and one output, and these three channels make up its entire structure. In our case there is also a third input, a bias fixed at 1, which covers the case where both real inputs are zero (without it, the weighted sum at that point would always be zero). We’ll ignore it for now to keep things simple.

As data flows through the perceptron, it enters through the two inputs. Each input is multiplied by its own weight, and the perceptron decides what to output based on the weighted sum of its inputs.
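In symbols, using $w_0$, $w_1$ and $w_2$ as my own names for the three entries of the weight list (the third belongs to the bias input, which is always 1), the output is a step function applied to the weighted sum. The ±0.1 output values match the `activate` function in the code below.

$$
s = w_0 x + w_1 y + w_2 \cdot 1, \qquad
\text{output} =
\begin{cases}
0.1 & \text{if } s > 0,\\
-0.1 & \text{otherwise.}
\end{cases}
$$

At the point (0, 0) the first two terms vanish, so $s = w_2$; without the bias input, $s$ would be zero there no matter how the weights were set.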

The simulation I’ve written uses Python and pygame. It consists of a single perceptron that is trained to guess whether a point in the Cartesian plane lies above or below the line y = x. As the simulation runs, you will see the points switch between filled and empty to show the perceptron’s current guess. Once the perceptron has crunched through enough points, its accuracy goes from essentially a coin flip (50/50) to roughly 95 to 99 percent.

This form of learning is called supervised learning, because the perceptron is trained on a dataset in which every input comes with the desired output attached. From here, neural networks are built by adding more perceptrons and layering them together in different arrangements.
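To make the “50/50 to 95 to 99 percent” claim concrete, here is a minimal sketch of how accuracy can be measured: count how many points receive the right guess. The labeling rule (0.1 above y = x, -0.1 otherwise), the sample range, and the function names `desired_output`, `guess` and `accuracy` are my assumptions for illustration, not taken from the original program.

```python
import random

def desired_output(x, y):
    # Assumed labels: 0.1 above the line y = x, -0.1 on or below it
    return 0.1 if y > x else -0.1

def guess(weights, x, y):
    # Weighted sum of x, y and the constant bias input 1, then a step
    s = weights[0] * x + weights[1] * y + weights[2] * 1
    return 0.1 if s > 0 else -0.1

def accuracy(weights, points):
    # Fraction of points whose guess matches the desired output
    correct = sum(guess(weights, x, y) == desired_output(x, y) for x, y in points)
    return correct / len(points)

rng = random.Random(0)
points = [(rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(1000)]
print(accuracy([0.0, 0.0, 0.0], points))   # always guesses "below": roughly 0.5
print(accuracy([-1.0, 1.0, 0.0], points))  # s = y - x, the exact rule → 1.0
```

An untrained perceptron behaves roughly like the first case, and training moves its weights toward something like the second.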

The code consists mainly of two classes: a Trainer class and the perceptron class. Each Trainer is simply a container for one labeled training point, and the full set of them is created at the beginning of the program:

```python
class Trainer:
    def __init__(self, x, y, a):
        self.lastGuess = 0        # most recent guess, reused for drawing
        self.inputs = [x, y, 1]   # x, y and the constant bias input
        self.answer = a           # desired output (the label)
```
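The post doesn’t show how the Trainer objects are generated, so the following is only a sketch under assumptions: points are drawn uniformly from a square centred on the origin (a hypothetical `half_range` of 200), and labeled 0.1 above y = x and -0.1 otherwise to match the two values `activate` returns. The `make_training_set` name and the seed are mine.

```python
import random

class Trainer:
    # Same layout as the post's Trainer: one labeled point per object
    def __init__(self, x, y, a):
        self.lastGuess = 0
        self.inputs = [x, y, 1]   # x, y and the constant bias input
        self.answer = a           # desired output for this point

def make_training_set(n, half_range=200.0, seed=None):
    # Hypothetical generator: points uniform in a square centred on the origin
    rng = random.Random(seed)
    training = []
    for _ in range(n):
        x = rng.uniform(-half_range, half_range)
        y = rng.uniform(-half_range, half_range)
        answer = 0.1 if y > x else -0.1  # assumed labels: above y = x -> 0.1
        training.append(Trainer(x, y, answer))
    return training

training = make_training_set(2500, seed=1)
print(len(training), training[0].inputs[2])  # → 2500 1
```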

The perceptron class has three methods. The activate method contains what’s known in the biz as the activation function, which decides whether the perceptron “fires”, that is, whether it guesses that the point is above or below the line. The choice of activation function alone can change how efficiently a network learns, but this example keeps it extremely simple: a step function that returns 0.1 when the weighted sum is greater than zero and -0.1 otherwise.

```python
# Perceptron activation function
def activate(self, value):
    if value > 0:
        return .1
    else:
        return -.1
```
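To give a sense of the customization mentioned above, here is the post’s step function next to two smooth activations that are common in larger networks, the logistic sigmoid and tanh. These two are not part of this simulation; they are shown only for comparison, and the function names are my own.

```python
import math

def step(value):
    # The post's activation: two fixed outputs split at zero
    return 0.1 if value > 0 else -0.1

def sigmoid(value):
    # Logistic sigmoid, output in (0, 1); split by sign to avoid overflow in exp
    if value >= 0:
        return 1.0 / (1.0 + math.exp(-value))
    e = math.exp(value)
    return e / (1.0 + e)

def tanh(value):
    # Hyperbolic tangent, output in (-1, 1)
    return math.tanh(value)

for v in (-2.0, 0.0, 2.0):
    print(v, step(v), round(sigmoid(v), 3), round(tanh(v), 3))
```

Smooth functions like these matter once networks are trained with gradients, since a step function has zero slope almost everywhere.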

The second method is feed_forward. This is where each input is multiplied by its weight and the weighted sum is passed to the activation function. It’s used in both training and application, since both need the output for the current weights.

```python
# Calculate the output from the current weights and the given inputs
# The number of inputs must match the number given when the class was constructed
def feed_forward(self, inputs):
    weighted_sum = 0.0
    for i in range(len(self.weights)):
        weighted_sum += inputs[i] * self.weights[i]
    return self.activate(weighted_sum)
```
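A quick worked example of the weighted sum, using made-up weights (the post’s perceptron starts from its own weights, which aren’t shown). With weights [0.5, -0.25, 0.1] and the point (2, 4) plus the bias input 1, the sum is 0.5 × 2 + (-0.25) × 4 + 0.1 × 1 = 0.1, which is above zero, so the step function returns 0.1. The free functions below mirror the methods above without the class.

```python
def activate(value):
    # Step activation from the post
    return 0.1 if value > 0 else -0.1

def feed_forward(weights, inputs):
    # Same computation as the method above, written as a free function
    weighted_sum = 0.0
    for w, x in zip(weights, inputs):
        weighted_sum += w * x
    return activate(weighted_sum)

weights = [0.5, -0.25, 0.1]   # hypothetical weights for illustration
print(feed_forward(weights, [2, 4, 1]))  # → 0.1
print(feed_forward(weights, [0, 4, 1]))  # sum is -0.9 → -0.1
```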

The last perceptron method is train. This is where the weights are adjusted based on how wrong the perceptron’s current guess is: each weight moves by the learning constant times the error times its input. The larger the error, the larger the change, so as the perceptron becomes more accurate, the error, and with it the size of each adjustment, decreases.

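```python
def train(self, inputs, desired):
    guess = self.feed_forward(inputs)
    error = desired - guess
    print(self.weights)  # debug output: the weights before this update
    # Nudge each weight in proportion to the error and to its input
    for i in range(len(self.weights)):
        self.weights[i] += self.learning_constant * error * inputs[i]
```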
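Here is one update worked through in numbers. The learning constant of 0.01, the starting weights, and the ±0.1 labels are assumptions for illustration, since the post doesn’t list them. The point (3, 1) lies below y = x, so its desired output is -0.1, but the starting weights give 0.2 × 3 + (-0.1) × 1 + 0.0 × 1 = 0.5, so the guess is 0.1 and the error is -0.2. Each weight then moves by 0.01 × (-0.2) × its input.

```python
def activate(value):
    # Step activation from the post
    return 0.1 if value > 0 else -0.1

def train_step(weights, inputs, desired, learning_constant):
    # Returns updated weights; mirrors the loop in the post's train method
    guess = activate(sum(w * x for w, x in zip(weights, inputs)))
    error = desired - guess
    return [w + learning_constant * error * x for w, x in zip(weights, inputs)]

new_weights = train_step([0.2, -0.1, 0.0], [3, 1, 1], desired=-0.1, learning_constant=0.01)
print([round(w, 3) for w in new_weights])  # → [0.194, -0.102, -0.002]
```

Had the guess been right, the error would have been zero and the weights would not have moved at all.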

The main program first generates the training data and then enters an infinite loop: train on the next point, draw the current guesses in a pygame window, and repeat. The core of each pass through the loop looks like this, with the drawing code omitted:

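```python
# Train on one new data point per frame until every point has been used
if count < len(training):
    ptron.train(training[count].inputs, training[count].answer)
    count += 1

    # The weights just changed, so refresh the guess for every point seen so far
    for i in range(count):
        training[i].lastGuess = ptron.feed_forward(training[i].inputs)

# After training ends the weights no longer change, so cached guesses are reused
for i in range(count):
    guess = training[i].lastGuess
    # ... draw point i filled or empty depending on guess ...
```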

I capped the simulation at 2,500 data points because by then the perceptron has usually done most of the learning it is capable of.
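For anyone who wants to try this without pygame, here is a minimal headless sketch of the whole process: a perceptron with the same three methods, trained on one new point per step for 2,500 points, then scored on fresh points. The constructor (random starting weights in [-1, 1] and a learning constant of 0.01), the `HALF_RANGE` window size, the ±0.1 labels and the seed are my assumptions, since the post doesn’t show those parts; the printed accuracy will vary with them.

```python
import random

HALF_RANGE = 200.0  # hypothetical half-width of the window, in pixels

class Perceptron:
    def __init__(self, n, learning_constant=0.01, rng=None):
        # Hypothetical constructor: random starting weights in [-1, 1]
        rng = rng if rng is not None else random.Random()
        self.weights = [rng.uniform(-1, 1) for _ in range(n)]
        self.learning_constant = learning_constant

    def activate(self, value):
        # Step activation, as in the post
        return 0.1 if value > 0 else -0.1

    def feed_forward(self, inputs):
        weighted_sum = 0.0
        for i in range(len(self.weights)):
            weighted_sum += inputs[i] * self.weights[i]
        return self.activate(weighted_sum)

    def train(self, inputs, desired):
        # Perceptron update, as in the post (minus the debug print)
        error = desired - self.feed_forward(inputs)
        for i in range(len(self.weights)):
            self.weights[i] += self.learning_constant * error * inputs[i]

def labeled_point(rng):
    # Assumed data: uniform in the square, 0.1 above y = x, -0.1 otherwise
    x = rng.uniform(-HALF_RANGE, HALF_RANGE)
    y = rng.uniform(-HALF_RANGE, HALF_RANGE)
    return [x, y, 1], (0.1 if y > x else -0.1)

rng = random.Random(42)
ptron = Perceptron(3, rng=rng)
for _ in range(2500):  # one new training point per step, capped at 2,500
    inputs, answer = labeled_point(rng)
    ptron.train(inputs, answer)

# Score on points the perceptron never trained on
test_set = [labeled_point(rng) for _ in range(1000)]
correct = sum(ptron.feed_forward(inputs) == answer for inputs, answer in test_set)
print(f"accuracy: {correct / len(test_set):.1%}")
```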

Check out the full code on my GitHub, linked in the sidebar!